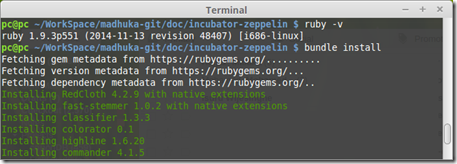

Prerequisites

- Java 1.7

- maven 3.2.x or 3.3.x

- nodejs

- npm

- Cygwin
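Before starting, it can help to confirm that the tools listed above are actually on the PATH. The following is a minimal sketch, assuming it is run from a Cygwin (or other POSIX-style) shell; `check_tools` is a hypothetical helper name, not something that ships with Zeppelin.

```shell
# Report which of the given tools can be found on PATH.
# Returns non-zero if at least one tool is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Tool names taken from the prerequisites list above.
check_tools java mvn node npm || echo "Install the missing tools before building."
```

This only checks that each command exists; it does not verify the version numbers.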

Here are the versions I used on Windows 8 (64-bit)

1. Clone the git repo

git clone https://github.com/apache/incubator-zeppelin.git

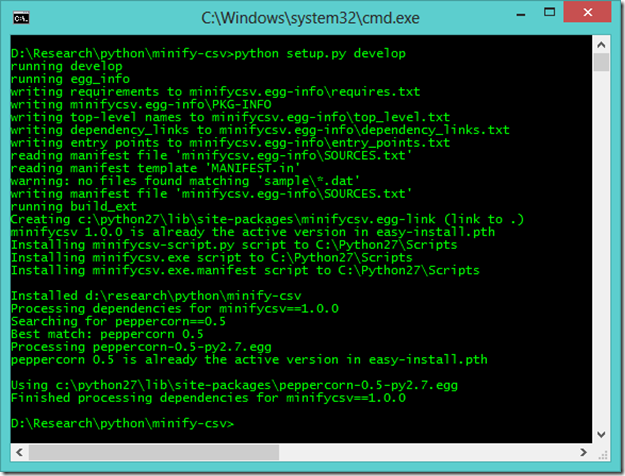

2. Let’s build Incubator-zeppelin from the source

mvn clean package

When you build from the Windows command shell, or from a directory with a space in its path, some tests will break. The Windows newline issue (Unix vs. DOS line endings) breaks some tests as well. You can skip the tests for now with '-DskipTests'. Use '-U' to make Maven fetch updated snapshots while it is building.
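Put together, the build command with both options might look like the sketch below. `-DskipTests` skips test execution and `-U` is Maven's flag for forcing a check for updated snapshots. The `zeppelin_build` wrapper and the `ZEPPELIN_RUN` variable are hypothetical names used only for illustration; by default the function just prints the command so you can review it first.

```shell
# Print the Maven build command; run it only when ZEPPELIN_RUN=1 is set.
zeppelin_build() {
  set -- mvn clean package -DskipTests -U
  if [ "${ZEPPELIN_RUN:-0}" = "1" ]; then
    "$@"        # actually run the build
  else
    echo "$*"   # dry run: show what would be executed
  fi
}

zeppelin_build
```

Run it as `ZEPPELIN_RUN=1 zeppelin_build` from the incubator-zeppelin directory to start the real build.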

The Incubator-zeppelin build should now succeed.

A few issues you may face on Windows

Error 01

[ERROR] Failed to execute goal com.github.eirslett:frontend-maven-plugin:0.0.23:bower (bower install) on project zeppelin-web: Failed to run task: 'bower --allow-root install' failed. (error code 1) -> [Help 1]

You can find 'bower' in the incubator-zeppelin\zeppelin-web folder. Go to the zeppelin-web directory, enter 'bower install', and wait until it completes.

Sometimes you will get an 'issue in node-gyp' error. In that case, check your Node.js version and whether the Node.js location is pointed to correctly.

- $ node --version

- $ which node

Then you can get the newest version of node-gyp

- npm install node-gyp@latest

Sometimes, depending on the Cygwin user's permissions, you have to install 'bower' yourself if it is not already installed.

- npm install -g bower

Error 02

[ERROR] bower json3#~3.3.1 ECMDERR Failed to execute "git ls-remote --tags --heads git://github.com/bestiejs/json3.git", exit code of #128 fatal: unable to connect to github.com: github.com[0: 192.30.252.130]: errno=Connection timed out

Instead of running this command:

git ls-remote --tags --heads git://github.com/bestiejs/json3.git

you should run this command:

git ls-remote --tags --heads git@github.com:bestiejs/json3.git

or

git ls-remote --tags --heads https://github.com/bestiejs/json3.git

Alternatively, you can still run 'git ls-remote --tags --heads git://github.com/bestiejs/json3.git', but you need to make git always use https, like this:

git config --global url."https://".insteadOf git://
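To make the effect of that setting concrete, the sketch below mimics in plain shell the prefix swap that the `insteadOf` rule performs: a URL starting with `git://` is rewritten to start with `https://`, and anything else is left alone. `rewrite_git_url` is a hypothetical helper for illustration only; git does this rewriting internally and does not need it.

```shell
# Mimic the url."https://".insteadOf "git://" rule:
# swap the git:// prefix for https://, pass other URLs through unchanged.
rewrite_git_url() {
  case "$1" in
    git://*) printf 'https://%s\n' "${1#git://}" ;;
    *)       printf '%s\n' "$1" ;;
  esac
}

rewrite_git_url git://github.com/bestiejs/json3.git
```

With the real setting in place, bower's 'git ls-remote' call against the git:// address goes out over https, which is usually allowed through firewalls that block the git protocol port.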

Much of the time this issue occurs due to a corporate network or proxy. In that case, adding the proxy settings to git's config fixes it.

git config --global http.proxy http://proxyuser:proxypwd@proxy.server.com:8080

git config --global https.proxy https://proxyuser:proxypwd@proxy.server.com:8080

Error 03

You will have to fix the newline issue on Windows. On Windows, a newline is marked as '\r\n'.
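One common way to deal with this is to strip the carriage returns from the affected files so that '\r\n' becomes '\n'. The following is a minimal sketch using `tr`; `to_unix_newlines` is a hypothetical helper name, and which files need converting depends on your checkout.

```shell
# Convert a file from Windows (CRLF) to Unix (LF) line endings in place.
# Note: this removes every carriage return in the file, not only those before a newline.
to_unix_newlines() {
  tmp="$1.tmp"
  tr -d '\r' < "$1" > "$tmp" && mv "$tmp" "$1"
}
```

For example, `to_unix_newlines some-script.sh` rewrites that file with Unix line endings (the file name here is only a placeholder).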
